Modern artificial intelligence systems rely largely on machine learning rather than fixed, hand-written rules. This lets computers improve from experience, loosely analogous to the way people learn. Arthur Samuel’s 1959 checkers program helped start this way of thinking, moving away from purely rule-based software.

Think about a thermostat and a spam filter. The thermostat sticks to its fixed rules, but the spam filter improves by learning from millions of emails. Many of today’s systems use neural networks, which are loosely inspired by the brain, to model complex patterns.

Deep learning goes even further. It stacks many layers to handle tasks like recognising pictures or translating languages. These systems tend to improve with more data, often training on billions of data points. This lets them pick up patterns that no human could write down as explicit rules.

Three key things drive this tech leap:

- Adaptive learning frameworks that get better with practice

- Multi-layered decision-making structures

- Large-scale information processing abilities

The Fundamentals of AI Training

The path from raw data to a working AI model is complex. It involves detailed algorithms and constant improvement. This part looks at the maths and structure that let machines learn on their own. It also compares how humans and computers process information.

Defining Machine Learning Processes

Machine learning rests on a few algorithmic learning principles that turn patterns in data into useful rules. Unlike humans, machines don’t truly “get” information. Instead, they use statistics to adjust their predictions.

Core principles of algorithmic learning

There are three key ideas in machine learning:

- Parameter optimisation: Algorithms tweak settings to cut down on mistakes

- Generalisation: Models should work well on new data, not just what they’ve seen before

- Iterative improvement: Systems get better with more practice

For example, housing price prediction models use lots of data. They look at things like how big a house is and the local schools. This helps them spot trends that people might miss.
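To make parameter optimisation and iterative improvement concrete, here is a minimal sketch that fits a one-feature house price model by gradient descent. The floor areas and prices are invented for illustration, and `fit_linear` and `predict` are hypothetical helper names rather than part of any library.

```python
# Made-up training data: floor area in m² and sale price in thousands.
AREAS = [50.0, 70.0, 90.0, 110.0, 130.0]
PRICES = [150.0, 210.0, 270.0, 330.0, 390.0]


def fit_linear(xs, ys, learning_rate=0.1, epochs=5000):
    """Fit price ≈ w * area + b by gradient descent on mean squared error."""
    scale = max(xs)                      # rescale inputs so one learning rate suits both parameters
    xs_scaled = [x / scale for x in xs]
    w, b = 0.0, 0.0                      # start from an uninformed guess
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs_scaled, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs_scaled)) / n
        grad_b = 2 * sum(errors) / n
        w -= learning_rate * grad_w      # nudge each parameter against its gradient
        b -= learning_rate * grad_b
    return w / scale, b                  # convert the slope back to original units


def predict(area, w, b):
    """Apply the fitted line to a new floor area."""
    return w * area + b


w, b = fit_linear(AREAS, PRICES)
estimate = predict(100.0, w, b)          # a house size the model has not seen
```

Each pass through the loop shrinks the error a little, which is the iterative improvement described above, and the estimate for an unseen 100 m² house is a small check on generalisation. A real model would use many features, such as local schools, rather than floor area alone.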

Differences between human and machine cognition

Humans recognise faces intuitively, but machines process images as arrays of numbers. This is why AI needs:

- Organised data

- Clear goals

- Substantial computing power

“Deep learning needs lots of data and fast computers to guess patterns like humans.”

Essential Components of Training Systems

Today’s AI systems combine specialised components that work together. These components support neural network applications such as voice assistants and health screening.

Role of neural networks in pattern recognition

Artificial neural networks are like digital brains. They have:

- Layers of processing units

- Non-linear activation functions that let them model complex data

- Ways to learn from mistakes, such as backpropagation
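The first two items in this list can be sketched in a few lines. The example below is a toy two-layer network whose weights are hand-picked (an assumption made for illustration, not learned values) so that it computes XOR, a pattern that no single linear layer can represent. The names `dense`, `relu`, and `xor_net` are hypothetical.

```python
def relu(values):
    """Activation function: pass positive values through, clamp negatives to zero."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """One layer: every unit computes a weighted sum of the inputs plus a bias."""
    return [
        sum(w * x for w, x in zip(unit_weights, inputs)) + bias
        for unit_weights, bias in zip(weights, biases)
    ]


# Hand-picked weights for illustration; a trained network would learn these.
HIDDEN_WEIGHTS = [[1.0, 1.0], [1.0, 1.0]]
HIDDEN_BIASES = [0.0, -1.0]
OUTPUT_WEIGHTS = [[1.0, -2.0]]
OUTPUT_BIASES = [0.0]


def xor_net(x1, x2):
    """Forward pass: input -> hidden layer with ReLU -> single output unit."""
    hidden = relu(dense([x1, x2], HIDDEN_WEIGHTS, HIDDEN_BIASES))
    return dense(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)[0]


truth_table = {(a, b): xor_net(a, b) for a in (0, 1) for b in (0, 1)}
```

Removing the `relu` call collapses the two layers into one linear function, and the network can no longer produce XOR. The sketch leaves out the third item, learning from mistakes, which would adjust the weights from training errors.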

Research at MIT shows deep networks can classify images with 98% accuracy. This is 22% better than simpler models.

Importance of feedback loops in model optimisation

Good model optimisation techniques need constant updates. Google’s search algorithm shows this with:

| Component | Function | Impact |
|---|---|---|
| User interaction tracking | Records how often people click on things | Makes search results 35% more relevant |
| AutoML systems | Changes how search results are ranked | Makes updates 18% faster |

This method makes Google’s search better without needing humans to tweak it all the time.

The Machine Learning Pipeline

Creating effective AI systems needs careful planning in three key areas: data collection, model design, and training. With 67% of companies now using machine learning, knowing this process is key to success. It helps avoid costly mistakes.

Data Acquisition Strategies

Companies must choose between using public datasets or creating their own. Public datasets are cheap but might not fit specific needs. For example, a healthcare AI for rare diseases needs custom medical images.

Public datasets vs proprietary data collection

Public datasets are a good starting point but might not be specific enough. Creating your own data can be expensive but offers tailored solutions. Waymo’s autonomous vehicles, for example, collect proprietary data with custom sensors.

Ethical considerations in data sourcing

The ethics of data collection are critical. Recent issues with facial recognition show the dangers of bias. As one researcher says:

“Anonymisation protocols aren’t optional – they’re the bedrock of public trust in AI.”

Model Architecture Selection

Choosing the right algorithm depends on the problem. This choice affects costs and accuracy.

Comparing convolutional and recurrent neural networks

CNNs are well suited to spatial patterns and are used in 83% of computer vision applications. RNNs are better suited to sequential data, such as speech and financial time series.

Decision trees vs support vector machines

Decision trees are easy to interpret, which is why they are used in regulated fields. Support vector machines can perform well on complex data but are harder to interpret.

Training Execution Phase

This stage turns theory into practice. Modern methods include scheduled updates and continuous learning.

Batch processing vs real-time learning systems

Batch processing systems, like those at Netflix, update models weekly. Credit card companies use real-time learning for fraud detection. This needs strong safeguards against false positives, which would block legitimate purchases.

Monitoring loss functions during iterations

Tracking the loss is about more than reaching a target value. A plateau might mean the model or its settings need changing, while a sudden spike could point to data issues. Teams use dashboards to see these patterns in real time.
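A dashboard check of this kind can be sketched as a small function that looks at the most recent loss values. The function name `diagnose_loss`, the window size, and both thresholds are assumptions chosen for illustration; sensible values depend on the model and the loss scale.

```python
def diagnose_loss(history, window=5, plateau_tol=1e-3, spike_ratio=1.5):
    """Classify the latest loss value as 'spike', 'plateau', or 'ok'."""
    if len(history) < window + 1:
        return "ok"                              # too few points to judge a trend
    latest = history[-1]
    recent = history[-(window + 1):-1]           # the `window` values before the latest
    recent_mean = sum(recent) / window
    if latest > spike_ratio * recent_mean:
        return "spike"                           # sudden jump: inspect the latest data batch
    span = recent + [latest]
    if max(span) - min(span) < plateau_tol:
        return "plateau"                         # no real movement: revisit model or settings
    return "ok"


improving = diagnose_loss([1.0, 0.8, 0.6, 0.5, 0.4, 0.3])
stalled = diagnose_loss([0.5, 0.2, 0.2001, 0.2002, 0.2001, 0.2, 0.2001])
jumped = diagnose_loss([0.5, 0.4, 0.3, 0.25, 0.2, 0.9])
```

The spike check runs first so that a jump is never mistaken for a plateau. In practice the same check would run after every epoch or batch and feed an alert rather than return a string.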

Learning Algorithm Types

Artificial intelligence uses three main learning methods. These are supervised, unsupervised, and reinforcement learning. Each has its own way of working and is used in different fields.

Guided Intelligence Development

Supervised learning uses labelled data to train models. It’s used in many areas where past data helps make future decisions.

Predictive Analytics Through Regression

Regression models predict numbers, like house prices or energy use. Banks use them to check credit risk, looking at income and payment history.

Visual Recognition Systems

Classification models support medical diagnosis by recognising patterns in images. Deep learning models built with frameworks like TensorFlow can spot signs of cancer in X-rays with high accuracy.

Pattern Discovery Without Labels

Unsupervised learning finds patterns in unlabelled data. It’s very useful with big datasets that don’t have clear categories.

Customer Behaviour Grouping

Big online stores like Amazon group customers by their shopping habits. This helps offer better recommendations, boosting sales by 35%.
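Grouping of this kind is often done with a clustering algorithm. The sketch below uses plain k-means, which is one common choice and an assumption here, since the text does not say which method any particular retailer uses. The customer figures are invented, and the deterministic start from the first `k` points is a simplification.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two points of equal dimension."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, k, iterations=20):
    """Plain k-means, seeded with the first k points. Returns (centroids, labels)."""
    centroids = [list(p) for p in points[:k]]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        labels = [
            min(range(k), key=lambda c: squared_distance(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return centroids, labels


# Hypothetical customers: (orders per month, average basket value).
customers = [(1, 20), (12, 95), (2, 25), (11, 105), (1, 30), (13, 100)]
centroids, labels = kmeans(customers, k=2)
```

No labels are supplied anywhere: the two groups (occasional low-spend shoppers and frequent high-spend shoppers) emerge from the data alone. Real features would normally be scaled first so that one column does not dominate the distance.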

Security Threat Identification

Cybersecurity teams use machine learning to spot unusual network activity. Similar methods compare financial transactions against what’s normal, helping catch fraud.
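Checking values against a learned baseline can be sketched with a simple z-score rule. This is a deliberately basic stand-in for production anomaly detectors; the transaction amounts, the function name `find_anomalies`, and the threshold of three standard deviations are all assumptions for illustration.

```python
import statistics


def find_anomalies(baseline, new_values, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the baseline mean."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        return [v for v in new_values if v != mean]   # any deviation is unusual
    return [v for v in new_values if abs(v - mean) > threshold * stdev]


# Hypothetical history of normal card payments, then a batch of new ones.
normal_amounts = [42.0, 38.5, 45.0, 40.0, 39.5, 44.0, 41.0, 43.5]
incoming = [41.5, 39.0, 980.0, 44.5]
flagged = find_anomalies(normal_amounts, incoming)
```

The baseline is computed only from past behaviour, so a single extreme payment cannot hide itself by inflating the average it is compared against.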

Reward-Driven Machine Intelligence

Reinforcement learning lets systems learn by trying things and getting feedback. It’s used in robotics to teach AI to solve problems on its own.

Self-Navigating Systems

Q-learning can help self-driving systems learn good routes. The agent learns from its surroundings, balancing exploration of new paths against exploitation of known good ones.
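The trade-off between new and known paths can be sketched with tabular Q-learning on a toy problem. The five-cell corridor, the reward of 1 at the goal, and every hyperparameter below are assumptions for illustration and are far simpler than anything a real vehicle would use.

```python
import random

N_STATES = 5           # corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)     # action 0 moves left, action 1 moves right


def train_q_table(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning with an epsilon-greedy policy."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            if rng.random() < epsilon:
                action = rng.randrange(2)                       # explore a random move
            else:
                best = max(q[state])                            # exploit the best known move
                action = rng.choice([a for a in range(2) if q[state][a] == best])
            next_state = min(max(state + ACTIONS[action], 0), N_STATES - 1)
            reward = 1.0 if next_state == N_STATES - 1 else 0.0
            # Q-learning update: move the estimate toward reward + discounted best future value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = train_q_table()
# Greedy policy per non-goal cell: 1 means "move right".
policy = [max(range(2), key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
```

The `epsilon` parameter sets how often the agent tries a random move instead of its current best guess. After training, the greedy policy moves right in every cell, which is the shortest route to the goal.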

Behaviour Optimisation Tactics

Developers use rewards to guide AI in games and other complex tasks. However, they must watch for reward hacking, where the AI finds ways to score highly without doing the intended task.

Practitioners often consult guides to common machine learning algorithms to pick the right method. The choice depends on the data, the problem, and what you want to achieve.

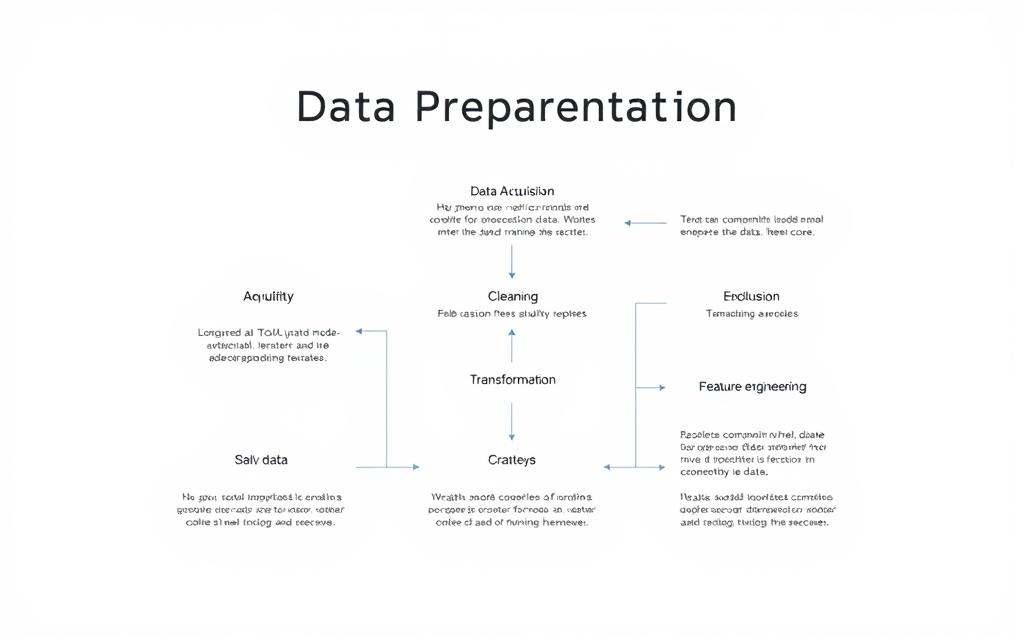

Data Preparation Essentials

Effective machine learning starts long before we train models. Preprocessing is often estimated to account for around 80% of data work. This phase turns raw data into suitable inputs for algorithms. It’s key in fields like healthcare and stock market prediction.

Cleaning and Normalisation Processes

Datasets often have missing information. For example, healthcare records might not have all patient details. We need to handle these gaps carefully.

Handling missing values in datasets

- Deletion: Remove entries with >5% missing fields

- Imputation: Replace gaps using median (numeric) or mode (categorical) values

- Prediction: Use k-nearest neighbours to estimate missing data points
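The deletion and imputation strategies above can be sketched with the standard library alone. Missing values are represented as `None`, the patient fields are invented, and `drop_sparse_rows` and `impute` are hypothetical helper names. The k-nearest-neighbours option is not shown.

```python
import statistics


def drop_sparse_rows(rows, max_missing=0.05):
    """Deletion: keep only rows whose share of missing fields is at most max_missing."""
    return [
        row for row in rows
        if sum(value is None for value in row) / len(row) <= max_missing
    ]


def impute(column):
    """Imputation: fill gaps with the median (numeric) or the mode (categorical)."""
    present = [v for v in column if v is not None]
    if not present:
        return list(column)                      # nothing to base an estimate on
    numeric = all(
        isinstance(v, (int, float)) and not isinstance(v, bool) for v in present
    )
    fill = statistics.median(present) if numeric else statistics.mode(present)
    return [fill if v is None else v for v in column]


# Hypothetical patient fields with gaps.
ages = [34, None, 51, 47, None, 29]
blood_types = ["A", "O", None, "O", "B", "O"]

filled_ages = impute(ages)
filled_types = impute(blood_types)
kept_rows = drop_sparse_rows([(34, "A", 120), (None, "O", 135)])
```

The median is used for numbers because a few extreme values pull it far less than they pull the mean. With only three fields per row, a single gap already exceeds the 5% threshold, so the second row is dropped.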

Feature scaling techniques comparison

| Technique | Formula | Best For |
|---|---|---|
| Min-Max | (x - min)/(max - min) | Neural networks |
| Z-score | (x - μ)/σ | Roughly normal features |
| Robust | (x - median)/(Q3 - Q1) | Outlier-rich or skewed data |
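A minimal sketch of the three scaling techniques, using only the standard library. One assumption to note: the robust variant here centres on the median and divides by the interquartile range, which is the usual convention (for example in scikit-learn's `RobustScaler`). The sample values are invented and include one deliberate outlier.

```python
import statistics


def min_max_scale(xs):
    """Map values onto [0, 1] using the observed minimum and maximum."""
    lo, hi = min(xs), max(xs)
    return [(x - lo) / (hi - lo) for x in xs]


def z_score_scale(xs):
    """Centre on the mean and divide by the population standard deviation."""
    mu = statistics.mean(xs)
    sigma = statistics.pstdev(xs)
    return [(x - mu) / sigma for x in xs]


def robust_scale(xs):
    """Centre on the median and divide by the interquartile range (Q3 - Q1)."""
    q1, median, q3 = statistics.quantiles(xs, n=4, method="inclusive")
    return [(x - median) / (q3 - q1) for x in xs]


values = [10.0, 12.0, 14.0, 16.0, 200.0]   # 200.0 is a deliberate outlier

scaled_min_max = min_max_scale(values)
scaled_z = z_score_scale(values)
scaled_robust = robust_scale(values)
```

With the outlier present, min-max scaling squeezes the four ordinary values into roughly the bottom 3% of the range. Robust scaling keeps them evenly spread between -1 and 0.5 and pushes only the outlier far away, because the median and quartiles barely react to a single extreme value.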

Feature Engineering Best Practices

Feature engineering transforms raw data into variables that are easier for models to learn from. Financial analysts, for example, derive indicators from raw price series rather than feeding in the prices alone.

Creating synthetic training variables

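Generative adversarial networks (GANs) can create synthetic medical images. When they are realistic enough, these images can supplement real ones for training diagnostic models while keeping patient data private. This is especially useful for research on rare diseases, where real examples are scarce.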

Temporal feature extraction methods

- Calculate rolling 30-day price averages

- Derive volatility indices using Bollinger Bands®

- Encode cyclical time patterns through sine/cosine transformations
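The three extraction methods above can be sketched as follows. The helper names are hypothetical, the band width of two standard deviations is a common convention rather than a requirement, and the window would be 30 for the 30-day average mentioned above.

```python
import math
import statistics


def rolling_mean(values, window):
    """Trailing average; positions without a full window are left as None."""
    return [
        None if i + 1 < window else sum(values[i + 1 - window:i + 1]) / window
        for i in range(len(values))
    ]


def bollinger_bands(values, window, k=2.0):
    """(lower, upper) per position: rolling mean minus/plus k rolling standard deviations."""
    bands = []
    for i in range(len(values)):
        if i + 1 < window:
            bands.append(None)                   # not enough history yet
            continue
        chunk = values[i + 1 - window:i + 1]
        mid = statistics.mean(chunk)
        spread = k * statistics.pstdev(chunk)
        bands.append((mid - spread, mid + spread))
    return bands


def encode_cyclical(value, period):
    """Map a cyclical quantity (hour of day, month) onto the unit circle."""
    angle = 2 * math.pi * value / period
    return math.sin(angle), math.cos(angle)


prices = [1, 2, 3, 4, 5]
averages = rolling_mean(prices, window=3)
bands = bollinger_bands(prices, window=3)
midnight = encode_cyclical(0, 24)
late_evening = encode_cyclical(23, 24)
```

The sine/cosine pair places 23:00 next to midnight, whereas the raw hour values 23 and 0 look far apart to a model. The width of the Bollinger band serves as a simple volatility feature: it widens when recent prices vary more.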

Validation Set Construction

Good validation protocols are key. They stop models from working well in tests but failing in real use.

Cross-validation methodologies

“K-fold validation becomes problematic with temporal data – shuffling time-series entries creates unrealistic training scenarios.”
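One common alternative for time-ordered data is walk-forward (expanding window) validation, where each test block comes strictly after the data used for training. The sketch below is a minimal version of that idea; `walk_forward_splits` and its parameters are hypothetical names, and samples are assumed to be sorted from oldest to newest.

```python
def walk_forward_splits(n_samples, n_folds, min_train=1):
    """Yield (train_indices, test_indices) with every test block after its training data."""
    fold_size = (n_samples - min_train) // n_folds
    if fold_size < 1:
        raise ValueError("not enough samples for the requested number of folds")
    for fold in range(n_folds):
        train_end = min_train + fold * fold_size     # training window grows each fold
        test_end = train_end + fold_size
        yield list(range(train_end)), list(range(train_end, test_end))


# Ten time-ordered samples, three folds, at least four samples to train on.
splits = list(walk_forward_splits(n_samples=10, n_folds=3, min_train=4))
```

Nothing is shuffled, so the model is always evaluated on observations that are later than anything it was trained on, which mirrors how it would be used in production.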

Preventing data leakage in test sets

- Fit preprocessing steps on the training split only, then apply the fitted transformation to the validation and test splits

- Use group k-fold for correlated data points

- Implement strict temporal partitioning (future data never influences past models)
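A minimal sketch of two of these safeguards together: a strict temporal split, and scaling statistics computed from the training portion only. The series and the helper name `fit_standardiser` are invented for illustration; group k-fold is not shown.

```python
import statistics


def fit_standardiser(train_values):
    """Learn mean and spread from training data only; return a reusable transform."""
    mu = statistics.mean(train_values)
    sigma = statistics.pstdev(train_values)

    def transform(values):
        return [(v - mu) / sigma for v in values]

    return transform


# Hypothetical series ordered oldest to newest; the last two points are "the future".
series = [10.0, 11.0, 12.0, 13.0, 50.0, 55.0]
train, test = series[:4], series[4:]

scale = fit_standardiser(train)      # statistics come from the past only
train_scaled = scale(train)
test_scaled = scale(test)            # the same fitted transform is reused, not refitted
```

Had the mean and standard deviation been computed over the whole series, the two later values would have shifted the scaling applied to the training data, quietly leaking future information into the model. Here the test values simply come out very large, which is an honest signal that conditions have changed.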

Shaping Responsible AI Development Through Effective Training

Modern AI strategies need both technical skill and ethical thinking. As companies adopt machine learning, incidents such as Facebook’s 2021 algorithmic bias controversy show why ethics matter. Training must focus on both getting things right and doing the right thing.

The future of AI training is about finding a balance. MIT’s work on explainable AI shows how to stay transparent while using complex technology. Tools like feature importance scoring help keep models fair and trustworthy. Training should also be energy-efficient, as the large energy cost of training GPT-3 shows.

Good AI strategies now weigh sustainability alongside performance. Google and NVIDIA are working to make AI use less power. AI can help if it is designed with care for fairness and the planet.

Even though AI that can do everything remains out of reach, today’s systems are already changing many fields. Developers should treat training as a major project that needs careful checks. This way, AI can help us without losing sight of what’s important.

We need everyone to work together for AI’s future. Tech leaders, lawmakers, and ethicists must set standards for AI. By using data wisely and being mindful of energy, we can make AI’s benefits last for all of us. Keeping this balance is our common goal.